Definition

Containers are software packages that bundle application code with all the dependencies it needs to run reliably on any compatible host. They encapsulate an application in an environment where all required software is available (such as specific library versions and configuration files), decoupling the application environment from the host environment where it runs. Containers solve the problem of reliably moving an application from one environment to another.

Docker is the most popular containerization technology. It’s a platform for developing, deploying, and managing containerized applications, and its ecosystem includes several tools to create, launch, and manage containers.

Overview

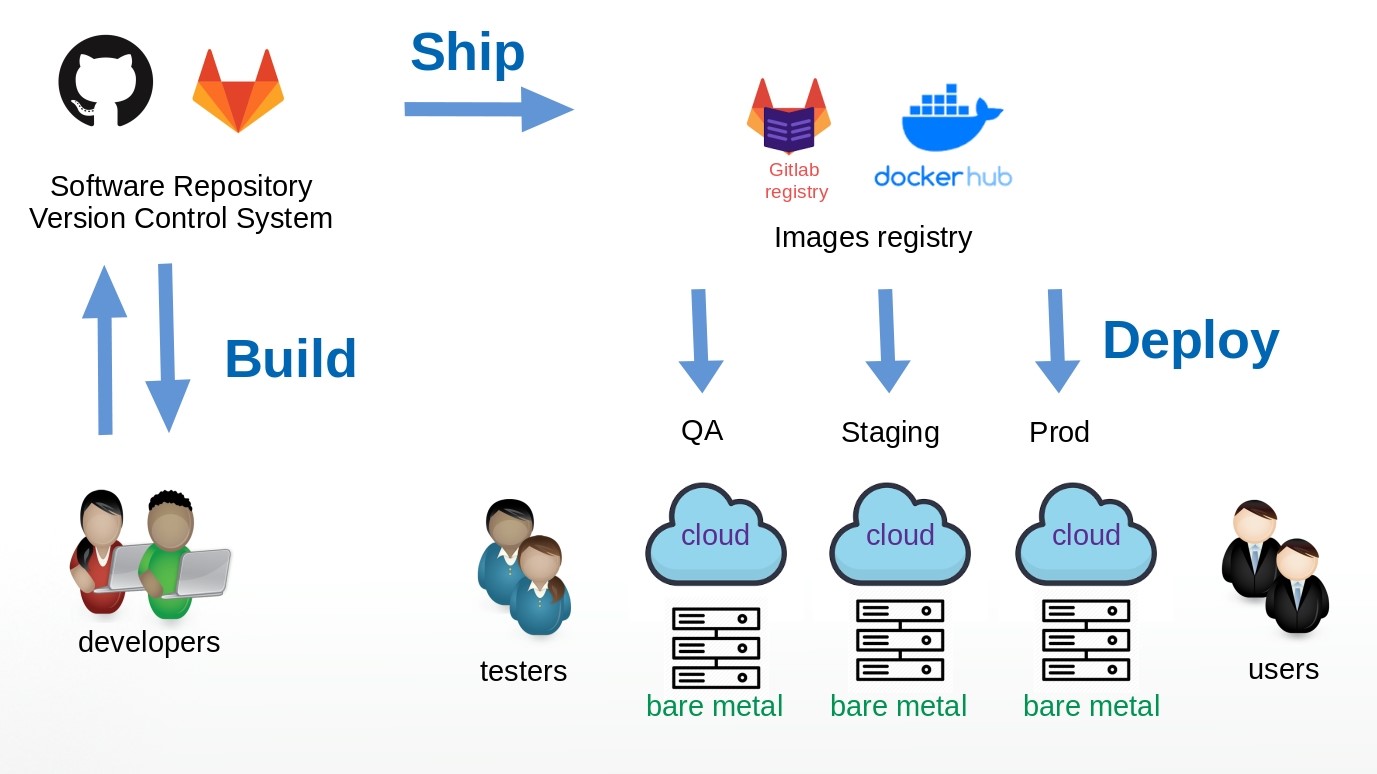

Docker provides the tools to implement an efficient continuous integration and continuous delivery (CI/CD) workflow. As the picture above shows, Docker containers let us build and ship images after testing them in our local environments. We can store Docker images in any registry and deploy them from there to any environment.

The deployment phase consists of launching the containers in the target environments: first quality assurance (QA), then staging, and finally production.

Docker provides a simple yet powerful CLI for building images, pushing them to a registry, pulling them from the registry onto the target environment, and finally running the containers and performing the tests. How does that compare to keeping an exact replica of every environment by hand with bare-metal installations?
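The build, ship, and deploy cycle above can be sketched as a dry run that only assembles the CLI commands in order, without executing anything. This is a minimal sketch under stated assumptions: `release_plan`, the image name `registry.example.com/shop/api:1.0`, and the `api-<env>` container names are hypothetical, and the per-environment tests are left as a comment. Since Podman accepts the same `build`, `push`, `pull`, and `run` subcommands, the `engine` parameter can be set to `podman`.

```python
import shlex

# Deployment order described in the text: QA first, then staging, then production.
ENVIRONMENTS = ("qa", "staging", "production")


def release_plan(image: str, context: str = ".", engine: str = "docker") -> list[tuple[str, list[str]]]:
    """Return (where, argv) pairs for building, shipping, and deploying one image.

    Hypothetical helper: it only builds argument lists; nothing is executed.
    """
    plan = [
        ("local", [engine, "build", "-t", image, context]),  # build from the Dockerfile in `context`
        ("local", [engine, "push", image]),                  # ship the image to the registry
    ]
    for env in ENVIRONMENTS:
        # On each target host, pull the exact image that was built, then start it detached.
        plan.append((env, [engine, "pull", image]))
        plan.append((env, [engine, "run", "-d", "--name", f"api-{env}", image]))
        # Run this environment's tests here and stop promoting the image if they fail.
    return plan


if __name__ == "__main__":
    for where, argv in release_plan("registry.example.com/shop/api:1.0"):
        print(f"[{where}] {shlex.join(argv)}")
```

Each printed line names where the command runs, for example `[qa] docker pull registry.example.com/shop/api:1.0`. Executing the plan (for instance with `subprocess.run(argv, check=True)` on each host) is deliberately left out.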

Docker containers start quickly (usually within a few seconds), and their images are relatively small (often in the range of 100 to 500 MB). The performance loss from running an application inside a container is negligible (on the order of two to three percent) compared with running it on bare metal. A virtual machine, by contrast, is much slower to start (it may take minutes), its image is typically several gigabytes, and the hypervisor costs about 10 to 15 percent in performance compared with bare metal.
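To see what those percentages mean in practice, the sketch below turns them into host counts for a hypothetical workload: 10,000 requests per second, served by identical hosts that each handle 1,000 requests per second on bare metal. The workload figures are assumptions; only the overhead ranges come from the text above.

```python
import math

BARE_METAL_RPS_PER_HOST = 1_000   # hypothetical capacity of one host on bare metal
TARGET_RPS = 10_000               # hypothetical total load to serve


def hosts_needed(overhead_pct: float) -> int:
    """Hosts required when each loses `overhead_pct` percent of its bare-metal capacity."""
    effective_rps = BARE_METAL_RPS_PER_HOST * (1 - overhead_pct / 100)
    return math.ceil(TARGET_RPS / effective_rps)


for label, overhead in [("bare metal", 0), ("container", 3), ("virtual machine", 15)]:
    print(f"{label:>15}: {hosts_needed(overhead)} hosts")
```

Under these assumptions the container deployment needs 11 hosts and the virtual machine deployment 12, against 10 on bare metal; the gap widens as the workload grows.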

Containers also make it practical to split an application into several functional components, each running in its own container and providing a service in a micro-services architecture. Compared with a monolithic architecture, this brings benefits such as better performance of individual services and more flexible scalability, since each component can be scaled independently. Ultimately, this makes better use of computing capacity.

A final question. Is Docker mandatory when working with containers? The answer is no.

Alternatives to Docker for running container images include rkt, Podman, and containerd.

How Docker Works

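The core technology underlying Docker is namespaces, a feature of the Linux kernel. Docker uses namespaces to give each container an isolated view of processes, users and privileges, and system resources such as filesystems and networks. It combines them with control groups (cgroups), another kernel feature, which limit how much CPU and memory each container can use.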

In other words, a container is a set of namespaces where processes and users are isolated. We can configure them to share resources like disk space or network interfaces. Managing the sets of namespaces needed for a container is the task of the Docker daemon.
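On Linux, the namespaces of every process are visible under `/proc/<pid>/ns`, where each entry is a symbolic link of the form `net:[<inode number>]`. Two processes share a namespace when those identifiers match, which is how the processes of one container share an isolated view that differs from the host's. The sketch below only reads those links; it assumes a Linux host, returns an empty dictionary elsewhere, and the helper names are hypothetical.

```python
import os


def namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type (net, pid, mnt, uts, ...) to its identifier for a process.

    Linux only: reads the symbolic links in /proc/<pid>/ns.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or no such process
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # e.g. another user's process and no permission to inspect it
    return result


def shared_namespaces(pid_a: str, pid_b: str) -> list[str]:
    """Namespace types in which two processes are members of the same namespace."""
    a, b = namespaces(pid_a), namespaces(pid_b)
    return [name for name in a if name in b and a[name] == b[name]]


if __name__ == "__main__":
    for name, ident in namespaces().items():
        print(f"{name:20} {ident}")
```

Running the script on the host and then inside a container prints different identifiers for namespaces such as `net`, `pid`, and `mnt`, which is the isolation the daemon sets up.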

The picture below shows how the Docker architecture differs from virtual machine architecture.

Docker acts as a thin layer between the application and the host OS. It provides an isolated space for each container, an abstraction of the host OS, while all containers share the host’s kernel. Because of this, the platform or application must be compatible with the host OS: we can’t natively run a Windows application in a container on a Linux server, or vice versa. Sharing the host kernel, with no guest OS in between, is what makes containers so efficient compared with virtual machines.

In the virtual machine architecture, on the other hand, the hypervisor is a thick layer that abstracts the underlying infrastructure (typically a bare-metal server), and each guest OS runs on top of it. This allows applications built for different operating systems to run on the same infrastructure.
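Because a container uses the host’s kernel, an image runs natively only where its target operating system and CPU architecture match the host. Docker describes these targets with platform strings such as `linux/amd64` or `linux/arm64`. The sketch below is a simplified compatibility check under that assumption: it ignores CPU variants (such as `arm/v7`) and emulation, and `host_platform` and `can_run_natively` are hypothetical helpers.

```python
import platform

# Common platform.machine() values mapped to Docker's architecture names (simplified).
_ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def host_platform() -> str:
    """Return the host as an os/arch string, e.g. 'linux/amd64'."""
    machine = platform.machine().lower()
    arch = _ARCH_ALIASES.get(machine, machine)
    return f"{platform.system().lower()}/{arch}"


def can_run_natively(image_platform: str) -> bool:
    """True if an image built for `image_platform` can share this host's kernel."""
    return image_platform.lower() == host_platform()


if __name__ == "__main__":
    print(host_platform(), can_run_natively("linux/amd64"))
```

Docker Desktop on macOS and Windows runs Linux containers inside a lightweight virtual machine, so there the relevant "host" is that VM rather than the desktop OS.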

Docker Architecture

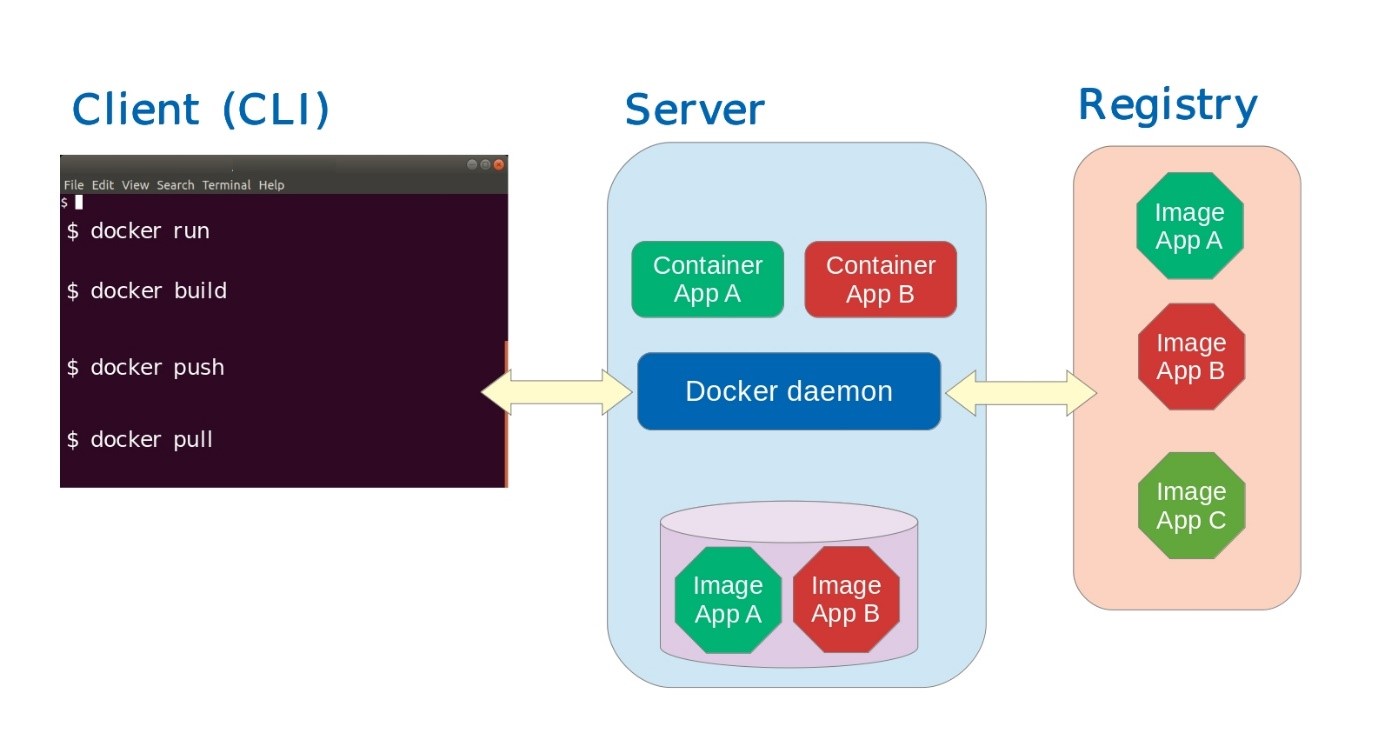

Docker architecture follows the client-server pattern. Clients, such as the CLI (command-line interface) or programs using the API, communicate with the server (the Docker daemon). The server keeps a local store of images and communicates with a remote registry, to which we push images and from which we pull them.

The Docker CLI is the most common way to send commands to the server. It communicates with the server (the dockerd daemon) through the Docker API. We can issue commands to build a Docker image (docker build), push or pull the image to or from a registry (docker push, docker pull), run a container from an image (docker run), and set up and manage resources shared between the containers and the host, like disk space (docker volume) or networks (docker network).
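Because the CLI is just an API client, any program can talk to the daemon the same way. On Linux, dockerd listens by default on the Unix socket `/var/run/docker.sock`, and the API is plain HTTP; `GET /containers/json` is the endpoint behind `docker ps`. The sketch below uses only Python's standard library and assumes a running daemon plus permission to use the socket (root or membership in the `docker` group); `UnixHTTPConnection` and `docker_get` are hypothetical helper names.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send GET `path` to the Docker Engine API and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"{path}: HTTP {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, `docker_get("/containers/json")` returns a list of dictionaries with keys such as `Id`, `Image`, and `Status`, the same data that `docker ps` formats as a table.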

The Docker server is implemented by the daemon, dockerd, which executes the requests that clients send through the API and relies on containerd, a lower-level runtime, to manage the container lifecycle. It can also communicate with other daemons to manage clustered services, as in Docker Swarm mode.

The Docker registry is a central place for storing and retrieving images. By default, Docker commands use Docker Hub, but we can use other publicly available registries or set up our own.
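When an image name does not mention a registry, Docker resolves it against Docker Hub (`docker.io`), adds the `library/` namespace used for official images, and uses the `latest` tag when none is given. The sketch below reproduces those defaults in simplified form: it ignores digests (`@sha256:...`) and other corner cases of the full reference grammar, and `parse_image_ref` is a hypothetical helper.

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"  # Docker Hub


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, and tag (simplified)."""
    name, tag = ref, "latest"
    # A tag follows the last ':' only if it comes after the last '/';
    # otherwise the ':' belongs to a registry port, as in localhost:5000/app.
    last_colon = ref.rfind(":")
    if last_colon > ref.rfind("/"):
        name, tag = ref[:last_colon], ref[last_colon + 1:]
    first, _, rest = name.partition("/")
    # The first component is a registry host if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name
    # Official Docker Hub images live under the implicit "library" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository
    return ImageRef(registry, repository, tag)
```

For instance, `parse_image_ref("tomcat:10")` gives `ImageRef(registry='docker.io', repository='library/tomcat', tag='10')`, while `localhost:5000/api` resolves to a self-hosted registry.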

Docker Images and Containers

A Docker image is a read-only template with instructions for creating a container. We build a Docker image from a Dockerfile, a text file whose instructions start from a base image and then add the software platform and applications we want in the container.

For instance, if our application is a backend based on Apache Tomcat, we may want to use the official Apache Tomcat image and add our application software, configuration files, and so on.
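For the Tomcat case, a Dockerfile needs little more than a base image and copy steps: the official `tomcat` image keeps Tomcat under `/usr/local/tomcat` and serves web applications from its `webapps` directory on port 8080. The sketch below renders such a Dockerfile from a small Python function so the base image and files can be parameterized; the `tomcat:10.1` tag, `app.war`, and `conf/app.properties` are hypothetical placeholders for our own application files.

```python
TOMCAT_HOME = "/usr/local/tomcat"  # CATALINA_HOME in the official tomcat image


def tomcat_dockerfile(base_image: str, war_file: str, config_files: dict[str, str]) -> str:
    """Render a Dockerfile that layers our application on top of a Tomcat base image.

    config_files maps a local path to a file name under Tomcat's conf/ directory.
    """
    lines = [
        f"FROM {base_image}",                               # starting point: the base image
        f"COPY {war_file} {TOMCAT_HOME}/webapps/ROOT.war",  # deploy at the root context path
    ]
    for src, dest_name in config_files.items():
        lines.append(f"COPY {src} {TOMCAT_HOME}/conf/{dest_name}")
    lines.append("EXPOSE 8080")                             # Tomcat's default HTTP port
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(tomcat_dockerfile("tomcat:10.1", "app.war", {"conf/app.properties": "app.properties"}))
```

Saving the output as `Dockerfile` next to `app.war` and running `docker build -t my-tomcat-app .` (a hypothetical image name) builds the image; the base image already defines the command that starts Tomcat.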

A container is a running instance of an image, and we can run several containers from the same image simultaneously. When we start a container, we can configure it to use system resources, such as volumes or networks, that it can share with other containers.
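The image/container relationship can be modeled in a few lines: the image is a read-only set of files, and each container adds its own writable layer on top, so a change made in one container is invisible to the image and to other containers started from it. This is a toy model of Docker's copy-on-write layering, not how a storage driver is implemented; the class names, image name, and file paths are hypothetical.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Mapping, Optional


@dataclass(frozen=True)
class Image:
    name: str
    files: Mapping[str, str]  # read-only view: path -> content

    @classmethod
    def build(cls, name: str, files: dict[str, str]) -> "Image":
        return cls(name, MappingProxyType(dict(files)))  # freeze a private copy


@dataclass
class Container:
    image: Image
    name: str
    env: dict[str, str] = field(default_factory=dict)                   # start-time config (unused here)
    _writable: dict[str, str] = field(default_factory=dict, repr=False)  # this container's own layer

    def read(self, path: str) -> Optional[str]:
        # The writable layer hides the image's version of a file.
        if path in self._writable:
            return self._writable[path]
        return self.image.files.get(path)

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: the change lands in this container's layer only.
        self._writable[path] = content


if __name__ == "__main__":
    app = Image.build("shop/api:1.0", {"/app/config.yml": "mode: prod"})
    web1 = Container(app, "web1", env={"PORT": "8080"})
    web2 = Container(app, "web2", env={"PORT": "8081"})
    web1.write("/app/config.yml", "mode: debug")
    print(web1.read("/app/config.yml"))  # mode: debug
    print(web2.read("/app/config.yml"))  # mode: prod
```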

Examples of Using Docker

Docker is an ideal solution in scenarios such as:

- Implementation of CI/CD processes in development, staging, and production environments.

- Solutions that are based on micro-services architecture, where we deploy the different components in their own containers so they can scale up and down independently.

- Solutions that require quick and flexible scalability in computing resources, like streaming services for live events or e-commerce services during marketing promotions.

- Solutions that require low latency, like game servers or content delivery, which benefit from the efficient use of computing resources that Docker containers provide.

If we want to run Docker locally, there are a couple of interesting alternatives:

- Docker Desktop, available on Windows 10 and 11 and on macOS, is a development suite by Docker that integrates several tools. With it, we can scan Docker images for vulnerabilities, manage volumes, control access to images, set up and manage a Kubernetes cluster, and easily deploy containers on Azure, among other things.

- Rancher Desktop, available on Windows, macOS, and Linux, is an open-source desktop application with which we can easily build, push, and pull images, run individual containers, or set up and manage clusters of containers.

When we run Docker in a production environment, we can manage the containers manually or with an orchestration tool, such as Docker Swarm or Kubernetes. With only a few containers (fewer than five) on one or two hosts, we can start and stop them manually. With tens or hundreds of containers spread across several hosts, we probably want Docker Swarm or Kubernetes.
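Orchestrators automate the scaling decisions mentioned earlier. Kubernetes' Horizontal Pod Autoscaler, for instance, documents its core rule as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)` and skips scaling when the ratio is within a tolerance (0.1 by default). The sketch below implements only that rule, with hypothetical min/max bounds and a metric such as average CPU utilization; the real controller adds stabilization windows and other refinements.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule, clamped to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave it alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, two replicas averaging 90% CPU against a 50% target give ceil(2 × 1.8) = 4 replicas, while four replicas at 20% scale down to ceil(4 × 0.4) = 2.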

Key Takeaways

- Docker is the most popular technology for implementing containers, and it plays a key role in CI/CD processes and micro-services architectures.

- Containerized applications make efficient use of computing resources and benefit from the flexible scalability that containers offer, whether handled manually or with orchestration tools like Docker Swarm or Kubernetes.

- Docker containers are an ideal solution for applications that must deliver high performance and low latency. Placing containerized applications on an edge computing platform, such as StackPath, can reduce latency further by running them closer to their users.
